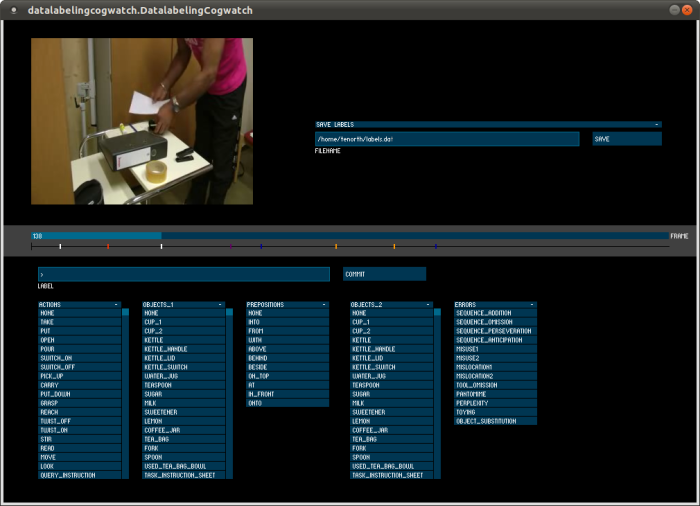

Labeling tool for human activity data

This tool lets you annotate observations of human activity data by setting markers in a timeline, each of which indicates the end of a segment. Markers can describe, e.g., the types of actions or the objects the observed subject has interacted with. The program then generates annotations for all frames since the previous marker. The set of marker types, as well as the mappings used when exporting the annotations, can be configured using YAML files.

Installation

The program was created in Java using Processing and controlP5 and is available for Linux, Windows and macOS. After downloading, just unpack the archive and start the DatalabelingCogwatch file.

The source code of this tool can be found on GitHub in the repository knowrob/data_labeling_tool. On Ubuntu, the following packages need to be installed:

sudo apt-get install libgstreamer0.10-0 libgstreamer0.10-dev libgstreamer-plugins-base0.10-0 libgstreamer-plugins-base0.10-dev

To provide some test data to start with, the following archive contains a sample folder structure including a video, some first dummy annotations, and some configuration files.

For comments, questions and support queries please contact Moritz Tenorth.

Usage

- Start the program and select the video file to be labeled

- The program tries to load the configuration file from ../config/config.yaml, relative to the folder that contains the video file

- If config.yaml cannot be found there, a dialog is shown to locate it

- The program then looks for existing labels in the annotations folder relative to config.yaml

- Step through the video frame by frame using the left and right arrow keys

- Jump to a position by clicking in the timeline (slider)

- Start and pause playback using the space bar

- Set label markers at the end of a segment to assign a label to the preceding segment

- Export labels to a file (for formats see below)

- Load labels from a file (simply restart the program and use the same output folder)

- Jump between labels with Page Up/Page Down

- Remove a marker with DEL, clear the current label buffer with ESCAPE, and commit the current label buffer (i.e. set a label on the timeline) with ENTER
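
The marker model behind these controls can be illustrated with a short Java sketch. It is not taken from the tool's source: the class `MarkerTimeline` and its methods are hypothetical, and the sketch only assumes what the list above describes, namely that a marker sits on the last frame of a segment and labels every frame since the previous marker, and that Page Up/Page Down move to the neighbouring marker.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of the timeline: a marker is stored at the LAST frame of its
// segment; the segment starts right after the previous marker (or at the first frame).
class MarkerTimeline {
    // One labeled segment; both frame numbers are inclusive.
    static final class Segment {
        final int first;
        final int last;
        final String label;

        Segment(int first, int last, String label) {
            this.first = first;
            this.last = last;
            this.label = label;
        }

        // Same layout as one entry of labels.dat: "<first> <last> <label>".
        @Override
        public String toString() {
            return first + " " + last + " " + label;
        }
    }

    private final TreeMap<Integer, String> markers = new TreeMap<>(); // end frame -> label
    private final int firstFrame;

    MarkerTimeline(int firstFrame) {
        this.firstFrame = firstFrame;
    }

    // Roughly what committing the label buffer with ENTER amounts to.
    void setMarker(int frame, String label) {
        markers.put(frame, label);
    }

    // Roughly what DEL amounts to: drop the marker at this frame, if any.
    void removeMarker(int frame) {
        markers.remove(frame);
    }

    // Nearest marker strictly before / after the given frame, or null if none.
    Integer previousMarker(int frame) {
        return markers.lowerKey(frame);
    }

    Integer nextMarker(int frame) {
        return markers.higherKey(frame);
    }

    // Recompute the segments: each marker labels all frames since the previous marker.
    List<Segment> segments() {
        List<Segment> result = new ArrayList<>();
        int start = firstFrame;
        for (Map.Entry<Integer, String> e : markers.entrySet()) {
            result.add(new Segment(start, e.getKey(), e.getValue()));
            start = e.getKey() + 1;
        }
        return result;
    }
}
```

Keeping the markers in a map sorted by frame makes the Page Up/Page Down lookups a single `lowerKey`/`higherKey` call, and lets the segments be recomputed from scratch whenever a marker is added or removed.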

Folder layout

To facilitate the annotation and the processing of the resulting information, the following folder layout should be used. Additional files and folders can of course be added, but the following ones are considered mandatory.

    <sequence-id>
    |- annotations            (will contain the generated labels)
    |  |- models              (will contain auto-generated BLN models)
    |  |- labels.dat          (main label file)
    |  \- <label-seq>.owl etc (other file formats possible)
    |
    |- config
    |  |- config.yaml
    |  |- owl-class-mapping.yaml
    |  \- ...
    |
    |- mocap
    |  \- motion capture data (format TBD)
    |
    \- video
       |- <sequence-id>.ogv   (low-res version for annotation, OGG video format)
       \- <sequence-id>.dv    (high-res version as recorded)
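
Because every location is fixed relative to the sequence folder, the paths needed for loading and saving can be derived from the video file alone. The following Java sketch assumes the video lies directly in `<sequence-id>/video/`; the class `SequenceLayout` is hypothetical and not part of the tool.

```java
import java.nio.file.Path;

// Hypothetical helper: derives the standard locations of the folder layout from the
// video path, assuming the video lies directly in <sequence-id>/video/.
class SequenceLayout {
    final Path sequenceDir;    // <sequence-id>
    final Path configFile;     // <sequence-id>/config/config.yaml
    final Path annotationsDir; // <sequence-id>/annotations
    final Path labelsFile;     // <sequence-id>/annotations/labels.dat

    SequenceLayout(Path videoFile) {
        // Folder containing the video, i.e. <sequence-id>/video
        Path videoDir = videoFile.toAbsolutePath().normalize().getParent();
        if (videoDir == null || videoDir.getParent() == null) {
            throw new IllegalArgumentException("video is not inside <sequence-id>/video/: " + videoFile);
        }
        sequenceDir = videoDir.getParent();
        configFile = sequenceDir.resolve("config").resolve("config.yaml");
        annotationsDir = sequenceDir.resolve("annotations");
        labelsFile = annotationsDir.resolve("labels.dat");
    }

    // Name of the sequence folder, i.e. the <sequence-id>.
    String sequenceId() {
        return sequenceDir.getFileName().toString();
    }
}
```

For example, `new SequenceLayout(Paths.get("/data/seq01/video/seq01.ogv")).configFile` would point to `/data/seq01/config/config.yaml`.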

Configuration files

The set of label categories and the label values can be configured using a set of YAML files in the config folder. Usually, such a set of configuration files needs to be created only once for a whole sequence of experiments and can be copied to each experiment data folder. Since these configuration files are stored inside the respective data folders, the label domains for the annotations are also inherently documented.

config.yaml

The format of the config.yaml is the following:

    # list mappings between internal IDs and
    # displayed text for each category
    label-categories:
      actionIDs : Actions
      obj1IDs : Objects_1
      # ...

    # Define which class denotes the action (becomes main index)
    action-category: actionIDs

    # define domain of label entries for each category
    label-values:
      actionIDs:
        - None
        - Take
        - Put
        # ...
      obj1IDs:
        - None
        - Cup_1
        - Cup_2
        # ...
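
Once parsed, the configuration boils down to three pieces of information: the category IDs with their display names, the allowed values per category, and the action category. The Java sketch below shows one possible in-memory representation; the class `LabelConfig` and its consistency checks (the action category must be one of the label categories, and every category needs a value domain) are assumptions drawn from the comments in the example. YAML parsing is left out because Java has no built-in YAML parser.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory form of config.yaml (YAML parsing itself is not shown).
// LinkedHashMap keeps the category order as written in the file.
class LabelConfig {
    final Map<String, String> categories = new LinkedHashMap<>();   // label-categories: ID -> display text
    final Map<String, List<String>> values = new LinkedHashMap<>(); // label-values: ID -> allowed values
    String actionCategory;                                          // action-category

    // Assumed consistency rules, derived from the comments in the example file.
    void validate() {
        if (!categories.containsKey(actionCategory)) {
            throw new IllegalStateException("action-category '" + actionCategory + "' is not a label category");
        }
        for (String id : categories.keySet()) {
            if (!values.containsKey(id)) {
                throw new IllegalStateException("no label-values defined for category '" + id + "'");
            }
        }
    }

    // True if the value belongs to the domain declared for the category.
    boolean allows(String category, String value) {
        List<String> domain = values.get(category);
        return domain != null && domain.contains(value);
    }
}
```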

owl-mapping.yaml

The labels used for the annotation are common English words. To generate action representations that can be used in the knowledge base, they need to be mapped to the concepts in the ontology, which are usually named slightly differently. This mapping can be defined in the owl-mapping.yaml file, which contains one mapping per line, grouped into three categories (classes, properties, and individuals). Each of these categories must be present, though it may be empty.

    classes:
      put: PuttingSomethingSomewhere
      take: TakingSomething
      stir: Stirring
      box: BoxTheContainer
      bag: PlasticBag
      bowl: Bowl-Mixing
    properties:
      with: primaryObjectMoving
      into: toLocation
      out_of: fromLocation
    individuals:
      cupboard1: cupboard23
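
Looking up a label token in the three sections could work as in the following hypothetical Java sketch (`OwlMapping` is not part of the tool). Two assumptions are made: tokens are matched case-insensitively, since the labels use `Put` while the mapping file uses `put`, and the sections are searched in the order classes, properties, individuals.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

// Hypothetical lookup over the three sections of owl-mapping.yaml.
// Assumption: keys are stored in lower case (as in the example) and label
// tokens are lower-cased before lookup.
class OwlMapping {
    final Map<String, String> classes = new HashMap<>();
    final Map<String, String> properties = new HashMap<>();
    final Map<String, String> individuals = new HashMap<>();

    // Resolve one label token; sections are searched in the order classes, properties, individuals.
    Optional<String> resolve(String token) {
        String key = token.toLowerCase(Locale.ROOT);
        for (Map<String, String> section : List.of(classes, properties, individuals)) {
            String target = section.get(key);
            if (target != null) {
                return Optional.of(target);
            }
        }
        return Optional.empty(); // token has no ontology counterpart
    }
}
```

An empty result flags a token that has no mapping yet, which is the kind of gap the BLOG export cannot tolerate.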

Annotation file formats

All labels are stored as the concatenation of the values of the different label categories, separated by hyphens (-). Because the annotation uses a kind of 'restricted natural language' (i.e. an action, an object, and possibly a preposition and another object), it can easily be translated into relational representations and ontology-based annotations by defining a mapping between the identifiers in the ontology and the labels used by the human annotator.
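
The hyphen convention can be sketched in a few lines of Java. `LabelCodec` is a hypothetical helper; it assumes each category contributes exactly one value, so a label can be split back by position, and it rejects values that already contain a hyphen because such labels could not be split unambiguously.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helpers for the "values joined by hyphens" label convention.
class LabelCodec {
    static final String SEPARATOR = "-";

    // Join one value per category, in category order, into a single label.
    static String compose(List<String> values) {
        for (String v : values) {
            // A hyphen inside a value would make the label impossible to split back unambiguously.
            if (v.contains(SEPARATOR)) {
                throw new IllegalArgumentException("label value contains '-': " + v);
            }
        }
        return String.join(SEPARATOR, values);
    }

    // Split a label back into its per-category values (limit -1 keeps empty trailing parts).
    static List<String> split(String label) {
        return Arrays.asList(label.split(SEPARATOR, -1));
    }
}
```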

labels.dat

    1 32 Put-Sugar-Into-Cup_1-Sequence_omission
    33 82 Put-Milk-Into-Coffee_jar-Misuse1
    ...

In this format, there is one entry per marker that has been set in the labeling tool. The first number is the first frame of the segment, the second is its last frame, and the label (see above) follows. This is the format the program uses internally to serialize and re-load a set of annotations.

If a labels.dat file is found in the output folder, it is automatically parsed and the markers are set accordingly. This can be very convenient for inspecting a set of labels, e.g. for checking correctness.
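
A reader and writer for this format might look like the following Java sketch. `LabelsDat` is hypothetical and not the tool's own parser; it assumes whitespace-separated fields and splits each line into at most three of them, so the label is everything after the second number.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical parser for labels.dat: one segment per line, "<first-frame> <last-frame> <label>".
class LabelsDat {
    static final class Entry {
        final int first;
        final int last;
        final String label;

        Entry(int first, int last, String label) {
            this.first = first;
            this.last = last;
            this.label = label;
        }
    }

    static List<Entry> parse(String content) {
        List<Entry> entries = new ArrayList<>();
        String[] lines = content.split("\\R"); // any line break: \n, \r\n, ...
        for (int i = 0; i < lines.length; i++) {
            String line = lines[i].trim();
            if (line.isEmpty()) {
                continue; // tolerate blank lines
            }
            // At most three fields: everything after the second number is the label.
            String[] parts = line.split("\\s+", 3);
            if (parts.length != 3) {
                throw new IllegalArgumentException("line " + (i + 1) + ": expected '<first> <last> <label>'");
            }
            int first = Integer.parseInt(parts[0]);
            int last = Integer.parseInt(parts[1]);
            if (last < first) {
                throw new IllegalArgumentException("line " + (i + 1) + ": last frame before first frame");
            }
            entries.add(new Entry(first, last, parts[2]));
        }
        return entries;
    }

    // Serialize back into the same one-line-per-segment layout.
    static String format(List<Entry> entries) {
        StringBuilder sb = new StringBuilder();
        for (Entry e : entries) {
            sb.append(e.first).append(' ').append(e.last).append(' ').append(e.label).append('\n');
        }
        return sb.toString();
    }
}
```

Reading an actual file could then be done with `LabelsDat.parse(Files.readString(path))` on Java 11 or later.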

labels.dat.frames

    …
    Put-Sugar-Into-Cup_1-Sequence_omission
    Put-Sugar-Into-Cup_1-Sequence_omission
    Put-Sugar-Into-Cup_1-Sequence_omission
    Put-Milk-Into-Coffee_jar-Misuse1
    Put-Milk-Into-Coffee_jar-Misuse1
    Put-Milk-Into-Coffee_jar-Misuse1
    …

This format contains one label per video frame, each on a separate line.
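
Deriving this per-frame file from the segments amounts to repeating each label once per frame of its segment. The hypothetical `FrameExpander` below assumes the segments are sorted and contiguous, as produced by the markers, and rejects gaps or overlaps because those would break the correspondence between consecutive lines and consecutive frames.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical conversion from labels.dat-style segments to labels.dat.frames lines.
class FrameExpander {
    static final class Segment {
        final int first;
        final int last;
        final String label;

        Segment(int first, int last, String label) {
            this.first = first;
            this.last = last;
            this.label = label;
        }
    }

    // One output line per frame; segments must be sorted and contiguous.
    static List<String> expand(List<Segment> segments) {
        List<String> lines = new ArrayList<>();
        int expected = segments.isEmpty() ? 0 : segments.get(0).first; // where the next segment must start
        for (Segment s : segments) {
            if (s.first != expected) {
                // A gap or overlap would shift every following label to the wrong frame.
                throw new IllegalArgumentException("segment starting at " + s.first + " should start at " + expected);
            }
            for (int f = s.first; f <= s.last; f++) {
                lines.add(s.label);
            }
            expected = s.last + 1;
        }
        return lines;
    }
}
```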

OWL export

The OWL exporter outputs a description of the task in terms of action instances in OWL, linked to the respective objects and additional information. This file can be loaded into KnowRob and be used for reasoning, e.g. using the mod_ham package from the knowrob_human repository. For more information on the OWL representation of actions, have a look here.

BLOG export

This exporter outputs the data as BLOG files that can be used for training Bayesian Logic Networks (BLNs). It relies on a complete mapping of all labels that appear in the data to OWL classes, properties or individuals (see owl-mapping.yaml).